http://www.nutty.ca/?page_id=352&link=shadow_map

Shadow Mapping

Shadow mapping is a hardware accelerated technique for casting shadows in a 3D scene. It has become the industry standard method for casting shadows due to its simplicity, its rendering speed, and its ability to produce soft shadows. This article will go over the shadow mapping technique as well as several filtering algorithms that are demonstrated in the interactive WebGL demo.

The Basics

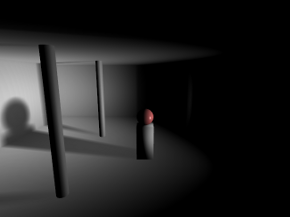

Shadow mapping has a very basic process. The idea is to render the scene from the light's point of view and record the distance between the vertex and the light source in a texture object. This texture is referred to as a depth map. Objects closer to the light source will have a small depth value (closer to 0.0) and objects furthest from the light source will have a larger depth value (closer to 1.0). There are two ways to record depth values. One is to use the projected z-coordinate (non-linear) and the other is to use linear depth. Both of these processes are described later.

Once you generate this depth map, you then render your scene as you normally would from your camera's point of view. In your fragment shader, you perform lighting calculations as you normally would. As a final step, you determine if that fragment is in shadow by projecting its vertex from the light's point of view.

If the distance between that vertex and the light source is greater than what is recorded in the depth map, then that fragment must be in shadow because something closer to the light source is occluding it. If the distance is less than what is recorded in the depth map, then that fragment is not in shadow and is probably casting its own shadow elsewhere in the scene. The stages are illustrated below.

Left: As seen from the camera.

Middle: As seen from the light source.

Right: Depth as seen from the light source.

Getting Started

There are a couple of caveats you need to be aware of before getting started with shadow mapping. Firstly, core OpenGL ES 2.0 (WebGL) does not support the depth component texture format unless an extension such as WEBGL_depth_texture is available. In other words, you cannot render the depth values directly to texture. You must calculate this yourself and render the result to the colour buffer. This is not necessarily a bad thing. Traditionally the z-coordinate from a projected vertex was used for depth map comparisons in shadow mapping. The problem, however, is that this method loses precision, leading to something called "shadow acne".

Shadow acne is what happens when you perform comparisons with floating point values that are neck and neck with each other, which is a general problem in all forms of digital computing. The rounding error causes the shadow test to pass sometimes and fail other times, creating random spots of false shadows in your scene. One way to combat this issue is to apply a small offset to your polygons when rendering the depth map, or a small offset to your shadow map depth calculations. However, this can lead to another problem called "peter panning".

Image of Peter Pan and his shadow. From Walt Disney's "Peter Pan", 1953.

The term "Peter Panning" comes from the fictional character Peter Pan, whose shadow could detach from him and either assist or jest with him at times. As the screenshot above shows, shadow acne has been removed at the expense of shadows appearing disconnected from their sources due to a large polygon offset.

One way to minimize both shadow acne and peter panning is to use linear depth. Instead of using the projected Z-coordinate, we calculate the distance between the vertex and the light source in view space. You still need to map the result between 0.0 and 1.0, so you divide the value by the maximum distance a vertex can have from its light source (i.e. the far clipping plane). You use this same divisor later in the shadow test to determine if a vertex is inside or outside of a shadow. The result is a depth value whose precision is spread evenly throughout the viewing frustum rather than concentrated near the light. It doesn't outright eliminate shadow acne, but it helps. When combined with filtering algorithms such as VSM or ESM (explained later), it's virtually a non-issue.
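To make the difference concrete, here is a small CPU-side Python sketch (not shader code) that compares the two depth encodings for a point in the light's view space. The function names, the near and far values, and the standard OpenGL perspective mapping used for the projected case are assumptions for illustration; they are not taken from the demo's shaders.

```python
import math

def linear_depth(view_pos, far):
    """Distance from the light (the view-space origin) divided by the far plane."""
    x, y, z = view_pos
    return math.sqrt(x * x + y * y + z * z) / far

def projected_depth(view_z, near, far):
    """Window-space depth of a standard OpenGL perspective projection.

    view_z is the positive distance along the light's viewing axis.
    """
    ndc_z = (far + near) / (far - near) - (2.0 * far * near) / ((far - near) * view_z)
    return 0.5 * ndc_z + 0.5

near, far = 0.1, 100.0        # assumed clipping planes
halfway = (0.0, 0.0, -50.0)   # a point halfway to the far plane

print(linear_depth(halfway, far))        # 0.5
print(projected_depth(50.0, near, far))  # about 0.999
```

With these assumed planes, the projected value has already used up almost the whole 0.0 to 1.0 range by the halfway point, which is why neighbouring surfaces far from the light end up "neck and neck". The linear value sits at 0.5, as you'd expect.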

Rendering the Depth Map

As noted above, OpenGL ES 2.0 (WebGL) does not support the depth component texture format, so we need to render the depth values into an RGBA fragment. This is performed in the depth.vs and depth.fs shaders. You want to pass in the light source projection and view matrices. If you're using perspective projection, you should use an aspect ratio of 1.0 and an FOV of 90 degrees. This will produce an even square with good viewing coverage. You can optionally use an orthographic projection for directional light sources like the sun. In the fragment shader, after you compute the distance and divide it by the far clipping plane, you need to store the floating point value into a 4-byte RGBA fragment (8 bits per channel). How do you do this? You use a carry-forward approach.

Example

Depth value = 0.784653

R = 0.784653 * 255 = 200.086515 = 200 (carry fraction over)

G = 0.086515 * 255 = 22.061325 = 22

B = 0.061325 * 255 = 15.637875 = 15

A = 0.637875 * 255 = 162.658125 = 162

The depth value 0.784653 is stored in an RGBA fragment with the values (200, 22, 15, 162).

When you need to retrieve the depth value, you simply reverse the operations. This is done as follows.

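$$\text{Depth} = \frac{R}{255} + \frac{G}{255^2} + \frac{B}{255^3} + \frac{A}{255^4}$$

$$\text{Depth} = \frac{200}{255} + \frac{22}{65025} + \frac{15}{16581375} + \frac{162}{4.2 \times 10^{9}}$$

$$\text{Depth} \approx 0.784313 + 0.000338 + 0 + 0$$

$$\text{Depth} \approx 0.784651$$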

You can see from the original depth value and the unpacked depth value that there is an error of 0.000002, which is quite acceptable. You'll also note that the blue and alpha channels have a huge divisor, which makes them practically irrelevant in restoring a floating point value. As such, you should expect to get at least 16 bit precision and at most 24 bit precision using this method.
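The carry-forward arithmetic above can be sketched on the CPU in a few lines of Python. This is only an illustration of the math, not the code from depth.fs, and the names pack_depth and unpack_depth are made up for this example.

```python
import math

def pack_depth(depth):
    """Split a depth in [0, 1) into four 8-bit channel values (R, G, B, A)."""
    channels = []
    value = depth
    for _ in range(4):
        value *= 255.0
        whole = math.floor(value)
        channels.append(int(whole))
        value -= whole  # carry the fraction over to the next channel
    return tuple(channels)

def unpack_depth(rgba):
    """Reverse the packing: R / 255 + G / 255^2 + B / 255^3 + A / 255^4."""
    return sum(c / 255.0 ** (i + 1) for i, c in enumerate(rgba))

packed = pack_depth(0.784653)
print(packed)                # (200, 22, 15, 162)
print(unpack_depth(packed))  # very close to 0.784653
```

When the divisions are carried out at full precision instead of being rounded by hand to six decimals, the unpacked value agrees with the original to well under 0.000001.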

You can find the code for packing a floating point value into an RGBA vector in the depth.fs shader. There is however one more process involved not outlined in the math above. GPUs have some sort of floating point precision or bias issue with the depth values you store in the pixel. While it's not documented anywhere, the consensus is to subtract the next component's value from the previous component to correct the issue. That is, R -= G / 255, G -= B / 255, and B -= A / 255. You will notice this being performed in the depth fragment shader.
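One plausible reading of that correction, sketched below in Python, is that each channel is first computed as a fractional part (so it still contains the finer detail that the next channel also stores), and the 8-bit render target then rounds it to the nearest step. Subtracting the next channel's contribution leaves a value that is already an exact multiple of 1/255, so the rounding can't push it up. The round-to-nearest storage, the fract-based encoding, and all function names here are assumptions for illustration, not a description of what any particular GPU does. Channels are kept in the 0.0 to 1.0 range, so the decode weights are 1, 1/255, 1/255^2 and 1/255^3.

```python
import math

def fract(x):
    return x - math.floor(x)

def store_8bit(c):
    """Assumed render-target behaviour: round a [0, 1] channel to the nearest 1/255."""
    return round(c * 255.0) / 255.0

def encode(depth, correct):
    # Each channel keeps the fractional part at a progressively finer scale.
    r, g, b, a = (fract(depth * s) for s in (1.0, 255.0, 255.0 ** 2, 255.0 ** 3))
    if correct:
        # Remove what the next channel already carries.
        r -= g / 255.0
        g -= b / 255.0
        b -= a / 255.0
    return [store_8bit(c) for c in (r, g, b, a)]

def decode(rgba):
    return sum(c / 255.0 ** i for i, c in enumerate(rgba))

depth = 0.7868
error_without = abs(decode(encode(depth, correct=False)) - depth)
error_with = abs(decode(encode(depth, correct=True)) - depth)
print(error_without, error_with)
```

For this depth the red channel would round up from 200.634 to 201 without the correction, which throws the decoded value off by roughly 1/255. With the correction the error is negligible.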

Shadow Testing

Once you have your depth map, you need to render your scene from the camera and check if each fragment is in shadow or not by comparing its vertex depth value projected by the light source with the depth value stored in the depth map. In the fragment shader shadowmap.fs, you will see these comparisons at the bottom of the main function.

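In order to know what pixel to sample in the depth map, you need to project your vertex using the light's projection and view matrix. In the test below, VL is that projected vertex, remapped so its xy components can be used as texture coordinates into the depth map, and depth is the fragment's distance from the light divided by the far clipping plane.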

    if ( depth > rgba2float(texture2D(DepthMap, VL.xy)) )
    {
        // Shadow pixel
        colour *= 0.5;
    }
    else
    {
        // Pixel not in shadow, do nothing
    }
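The same test can be sketched on the CPU in Python. The helper names, the clip-space to texture-coordinate mapping, and the optional bias parameter (the "small offset" mentioned earlier as a remedy for shadow acne) are assumptions for illustration rather than a copy of shadowmap.fs.

```python
def depth_map_uv(clip_pos):
    """Map a light clip-space position (x, y, z, w) to [0, 1] texture coordinates."""
    x, y, _, w = clip_pos
    return (0.5 * x / w + 0.5, 0.5 * y / w + 0.5)

def shade(colour, fragment_depth, stored_depth, bias=0.0):
    """Halve the colour when the fragment lies behind the recorded occluder."""
    if fragment_depth - bias > stored_depth:
        return tuple(0.5 * c for c in colour)
    return colour

white = (1.0, 1.0, 1.0)
print(depth_map_uv((0.0, 0.0, 1.0, 2.0)))   # (0.5, 0.5): centre of the depth map
print(shade(white, 0.6, 0.4))               # (0.5, 0.5, 0.5): in shadow
print(shade(white, 0.4, 0.6))               # (1.0, 1.0, 1.0): lit
```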

Filtering

The problem with standard shadow mapping is the high amount of aliasing along the edges of the shadow. You also cannot take advantage of hardware blurring and mipmapping to produce smoother looking shadows. To get around these issues, several filtering algorithms are discussed below.

PCF

Percentage closer filtering is one of the first filtering algorithms invented and it works by adding an additional process into the standard shadow mapping technique. It attempts to smooth shadows by analyzing the shadow contributions from neighbouring pixels.

The above example shows a 5x5 PCF filter. It doesn't use bilinear filtering so you can see how each neighbouring pixel is sampled. PCF can't operate on a blurred depth map. It requires an expensive 5x5 (or whatever kernel you wish to use) blurring operation for each fragment. While it's not recommended to use PCF, it does have the advantage of maintaining accuracy. It doesn't blur and thus "fudge" the depth map in order to get smoother shadows. With VSM and ESM, it's possible that blurring the depth map can produce false shadows, particularly along corners. It's a small tradeoff for an increase in speed.
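As a rough CPU-side illustration, the Python sketch below runs the shadow test against every texel in a square neighbourhood and returns the fraction that passed. The function name, the list-of-rows depth map, and the clamp-to-edge lookups are assumptions made for this example.

```python
def pcf(depth_map, x, y, fragment_depth, radius=2):
    """Fraction of the (2 * radius + 1)^2 neighbourhood that does not occlude the fragment.

    depth_map is a list of rows of linear depths; lookups clamp to the edge.
    radius=2 gives the 5x5 kernel, radius=1 a 3x3 kernel.
    """
    height, width = len(depth_map), len(depth_map[0])
    lit = 0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            sx = min(max(x + dx, 0), width - 1)
            sy = min(max(y + dy, 0), height - 1)
            if fragment_depth <= depth_map[sy][sx]:
                lit += 1
    return lit / float((2 * radius + 1) ** 2)

# An occluder (depth 0.3) covers the left half of a 6x6 map; the rest is floor (0.9).
depth_map = [[0.3, 0.3, 0.3, 0.9, 0.9, 0.9] for _ in range(6)]

print(pcf(depth_map, 0, 2, 0.8, radius=1))  # 0.0: fully shadowed
print(pcf(depth_map, 2, 2, 0.8, radius=1))  # one third lit: on the shadow edge
print(pcf(depth_map, 5, 2, 0.8, radius=1))  # 1.0: fully lit
```

The returned fraction is then multiplied into the fragment colour, which is what softens the edge. The nested loops also show where the per-fragment cost comes from.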

VSM and ESM

Variance Shadow Maps and Exponential Shadow Maps were designed to eliminate the performance penalty involved in smoothing shadows using the PCF algorithm. In particular, they avoid blurring during the render stage. Instead, they blur the depth map itself, which lets them take advantage of the separable blur algorithm, as well as anti-aliasing and mipmaps/anisotropic filtering. The example below demonstrates the results achieved by blurring the depth map and using one of the aforementioned filtering algorithms.

No blurring (standard shadow map look and feel).

3x3 blurring.

5x5 blurring.

The separable blurring technique (used here with a box blur) gets its name from the way it performs blurring. In the traditional sense, blurring is performed using a convolution filter, that is, an N x M matrix that samples neighbouring pixels and finds an average. A faster way to do this is to separate the blurring into two passes. The first pass blurs all pixels horizontally. The second pass blurs the result of the first pass vertically. For a separable kernel such as the box blur, the result is the same as the full convolution, but each pixel needs only N + M samples instead of N x M.
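The equivalence is easy to check on the CPU. The Python sketch below blurs a small image once with a full 2D box kernel and once with a horizontal pass followed by a vertical pass, then compares the two. The function names, the clamp-to-edge sampling, and the sample image are assumptions for illustration.

```python
def clamp(i, n):
    return min(max(i, 0), n - 1)

def blur_horizontal(img, radius):
    size = 2 * radius + 1
    w = len(img[0])
    return [[sum(row[clamp(x + d, w)] for d in range(-radius, radius + 1)) / size
             for x in range(w)] for row in img]

def blur_vertical(img, radius):
    size = 2 * radius + 1
    h = len(img)
    return [[sum(img[clamp(y + d, h)][x] for d in range(-radius, radius + 1)) / size
             for x in range(len(img[0]))] for y in range(h)]

def blur_2d(img, radius):
    size = (2 * radius + 1) ** 2
    h, w = len(img), len(img[0])
    return [[sum(img[clamp(y + dy, h)][clamp(x + dx, w)]
                 for dy in range(-radius, radius + 1)
                 for dx in range(-radius, radius + 1)) / size
             for x in range(w)] for y in range(h)]

image = [[0.1, 0.5, 0.9, 0.3, 0.7],
         [0.2, 0.4, 0.6, 0.8, 1.0],
         [0.9, 0.1, 0.5, 0.3, 0.2],
         [0.0, 0.6, 0.4, 0.7, 0.3]]

two_pass = blur_vertical(blur_horizontal(image, 1), 1)  # 3 + 3 samples per pixel
one_pass = blur_2d(image, 1)                            # 3 x 3 samples per pixel
```

Both results match to within floating point rounding, while the two-pass version reads 6 samples per pixel instead of 9. The gap widens quickly as the kernel grows.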

Left: Unfiltered image.

Middle: Pass 1, horizontal blurring applied.

Right: Pass 2, vertical blurring applied.

What's unique here is that the lower the resolution of the depth map you use, the more effective the blurring. A 256x256 depth map for instance can produce a very nice penumbra, whereas a 1024x1024 depth map requires a large kernel to blur it sufficiently. You have to find the right balance between resolution and blurring. Too much of either can hinder performance.

VSM and ESM are virtually identical in terms of output quality, but there is one significant difference between the two. VSM requires you store both the depth and the depth squared in the depth map. This requires a 64 bit texture, which is not available on older hardware or even within OpenGL ES 2.0 (WebGL). As such, you need to compute and store both values as 16 bit into the RG and BA channels of the pixel. While the precision loss isn't that bad, a proper implementation would require more memory than what ESM requires.

The VSM formula is presented below.

S = ChebychevInequality(M1, M2, depth)

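Where M1 and M2 are the two moments read from the depth map (the depth and the depth squared) and depth is the depth of the fragment being tested.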

Chebychev's inequality function is what produces a gradient between 0.0 and 1.0 depending on whether or not the fragment is in shadow. The function is provided below.

    float ChebychevInequality (vec2 moments, float t)
    {
        // No shadow if depth of fragment is in front
        if ( t <= moments.x )
            return 1.0;

        // Variance of the depths under the filter: E[x^2] - E[x]^2.
        // Clamped to a minimum to absorb the error due to precision
        // loss from fp32 to RG/BA (moment1 / moment2).
        float variance = moments.y - (moments.x * moments.x);
        variance = max(variance, 0.02);

        // Calculate the upper bound
        float d = t - moments.x;
        return variance / (variance + d * d);
    }

The minimum variance you see in this function (the second argument to max) is configurable. I chose a value of 0.02 because that worked well within the 16 bit precision error. Adjusting this value can have both a positive and negative effect on your shadows. If you find you are getting a lot of shadow acne, you need to raise the minimum; otherwise you can lower it as far as you can without shadow acne becoming apparent. Once you have your shadow value calculated from Chebychev's inequality function, you multiply the value against the current fragment colour. Pixels not in shadow will return a value of 1.0 and pixels within shadow will return a value less than 1.0.
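To see how the two moments turn into a soft edge, here is a CPU-side Python port of the function, together with a small helper that averages depths the way blurring the depth map would. The helper name moments_from_depths, the min_variance parameter, and the sample depths are assumptions for illustration.

```python
def chebychev_inequality(moments, t, min_variance=0.02):
    mean, mean_sq = moments
    # No shadow if the fragment is in front of the average occluder depth.
    if t <= mean:
        return 1.0
    # Variance = E[x^2] - E[x]^2, clamped to a configurable minimum.
    variance = max(mean_sq - mean * mean, min_variance)
    d = t - mean
    return variance / (variance + d * d)

def moments_from_depths(depths):
    """Average depth and average squared depth over a filter region."""
    n = float(len(depths))
    return (sum(depths) / n, sum(z * z for z in depths) / n)

# A filter region straddling a shadow edge: half occluder (0.3), half floor (0.9).
edge = moments_from_depths([0.3, 0.3, 0.9, 0.9])
print(chebychev_inequality(edge, 0.9))   # about 0.5: half lit
print(chebychev_inequality(edge, 0.5))   # 1.0: in front of the mean, fully lit

# A region entirely covered by an occluder at 0.4, tested for a fragment at 0.6.
flat = moments_from_depths([0.4, 0.4, 0.4, 0.4])
print(chebychev_inequality(flat, 0.6))   # about 0.33, set entirely by the clamp
```

The last case shows why the clamp value matters: with no real variance in the region, the clamp alone decides how dark the shadow gets.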

An alternative to VSM is ESM. ESM is probably one of the best ways to currently filter shadow maps. It's memory efficient in that it only requires you to store the depth value in the depth map, and you can still take advantage of depth map blurring, mipmap generation, anisotropic filtering, etc. The ESM formula is presented below.

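$$S = \min\left(1,\; e^{\,c\,(z - d)}\right)$$

Where z is the depth stored in the (blurred) depth map, d is the depth of the fragment as seen from the light, and c is a positive constant that controls how quickly the shadow darkens.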

Like VSM, the value returned from this function produces a gradient between 0.0 and 1.0. You take this value and multiply it against the current fragment colour. Pixels not in shadow will return a value of 1.0 and pixels within shadow will return a value less than 1.0.
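A minimal Python sketch of the exponential test as it is commonly formulated is shown below. Here stored_depth is the value read from the depth map, fragment_depth is the fragment's depth from the light, and c is a positive sharpness constant. The function name and the value 80.0 are assumptions for illustration and aren't taken from the demo.

```python
import math

def esm_shadow(stored_depth, fragment_depth, c=80.0):
    """Return 1.0 when lit, falling off exponentially as the fragment sinks behind the occluder."""
    return min(1.0, math.exp(c * (stored_depth - fragment_depth)))

print(esm_shadow(0.50, 0.40))  # 1.0: fragment in front of the stored depth
print(esm_shadow(0.49, 0.50))  # about 0.45: just behind, partially shadowed
print(esm_shadow(0.40, 0.50))  # close to 0.0: well behind, fully shadowed
```

A larger c gives a harder edge, while a smaller c gives a softer one at the risk of light leaking through where the fragment sits just behind its occluder.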

Point Lights

Point lights perform the exact same calculations as directional light sources, except you have to work with cubemaps. A point light may require up to six sides (left, front, right, back, top, and bottom) to receive shadows. This can add up to 6 times more calculations, which can have a significant impact on performance. If dealt with intelligently, you can deduce which sides of the cube are visible and perform only calculations on those faces. When possible, you should take advantage of multiple render targets to quickly produce depth cubemaps. OpenGL ES 2.0 (WebGL) unfortunately supports only one colour target, so that's not an option. Nevertheless, you should use point light shadows sparingly.

The above screenshot shows the depth map for each side of the cubemap. When performing depth map comparisons, you need to find out which side in the cube you will be working with. To do this, calculate the vector from the light source to the vertex. This will point to the location in the cubemap containing the depth sample to compare against. The final result is a room with shadows emitted on all sides.
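The face lookup can be sketched in Python as picking the axis along which the light-to-vertex vector is largest. The function name and the "+x" style face labels are assumptions for illustration. In a shader, sampling a cubemap with that direction vector performs this selection for you.

```python
def cube_face(light_pos, vertex_pos):
    """Return the cubemap face ('+x', '-x', '+y', '-y', '+z' or '-z') the vertex falls on."""
    direction = [v - l for v, l in zip(vertex_pos, light_pos)]
    # The dominant axis of the direction decides the face; its sign picks the side.
    axis = max(range(3), key=lambda i: abs(direction[i]))
    sign = "+" if direction[axis] >= 0.0 else "-"
    return sign + "xyz"[axis]

print(cube_face((0.0, 0.0, 0.0), (1.0, 0.2, -0.3)))  # +x
print(cube_face((0.0, 1.0, 0.0), (0.0, -3.0, 1.0)))  # -y
```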

Cascaded Shadow Maps (CSM) and Parallel-Split Shadow Maps (PSSM)

This topic is not covered here, but it's one of the last remaining puzzles in shadow mapping. Now that you've seen what directional and point light shadow maps look like, the one problem not yet solved is what to do for large scale scenes. When you're outdoors and you can see the horizon, using a single depth map doesn't make much sense. You would never be able to accommodate all the detail in a single texture. This is where cascading comes into play. The idea is to split your viewing frustum into pieces, where each piece has its own depth map for shadow comparisons. For a detailed review of these processes, check out Cascaded Shadow Maps on MSDN as well as GPU Gems 3 Chapter 10 Parallel-Split Shadow Maps.
